这里将实现神经网络的误差反传训练算法,并应用它进行手写数字识别。相关数据文件:PRML_NN_data.mat和PRML_NN_weights.mat.

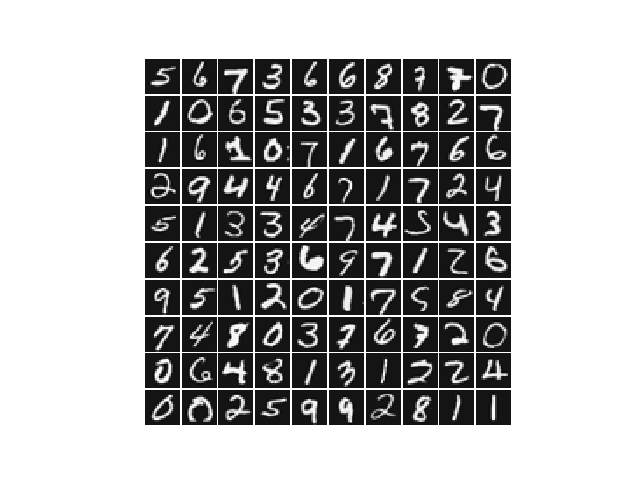

数据可视化

本次练习所用的数据集有5000个训练样本,每个样本对应于20x20大小的灰度图像。这些训练样本包括了9-0共十个数字的手写图像。这些样本中每个像素都用浮点数表示。加载得到的数据中,每幅图像都被展开为一个400维的向量,构成了数据矩阵中的一行。完整的训练数据是一个5000x400的矩阵,其每一行为一个训练样本(数字的手写图像)。数据中,对应于数字”0”的图像被标记为”10”,而数字”1”到”9”按照其自然顺序被分别标记为”1”到”9”。

程序的第一部分将加载数据,显示如下:

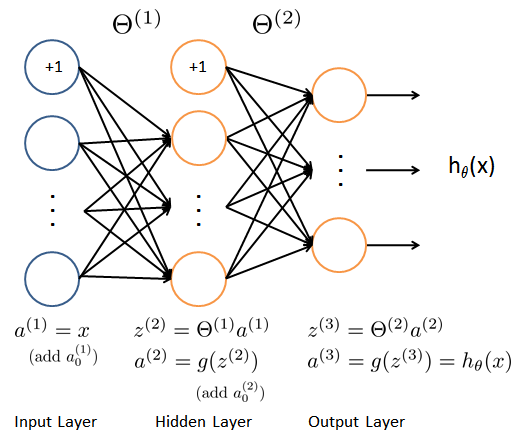

模型表示:

准备训练的神经网络是一个三层的结构,一个输入层,一个隐层以及一个输出层。由于我们训练样本(图像)是20x20的,所以输入层单元数为400(不考虑额外的偏置项,如果考虑单元个数需要+1)。在我们的程序中,数据会被加载到变量X和y里。

这里提供了一组训练好的网络参数$(\Theta^{(1)}, \Theta^{(2)})$。这些数据存储在数据文件PRML_NN_weights.mat中,在程序中被加载到变量 Theta1 和 Theta2 中。参数的维度对应于第二层有25个单元、10个输出单元(对应于10个数字的类别)的网络。

前向传播与代价函数

现在你需要实现神经网络的代价函数及其梯度。首先需要使得函数nn_cost_function能够返回正确的代价值。

神经网络的代价函数(不包括正则化项)的定义为:

其中$h_{\theta}(x^{(i)})$的计算如神经网络结构图所示,K=10是所以可能的类别数。这里的$y$使用了 ont-hot 的表达式。

运行程序,使用预先训练好的网络参数,确认你得到的代价函数是正确的。(正确的代价约为0.287629)。

代价函数的正则化

神经网络包括正则化项的代价函数为:

对应于偏置项的参数不能包括在正则化项中。对于矩阵 Theta1 与 Theta2 而言,这些项对应于矩阵的第一列。运行程序,使用预先训练好的权重数据,设置正则化系数$\lambda=1$(lmb)确认代价函数是正确的(0.383770)。

误差反传训练算法

sigmoid函数及其梯度

sigmoid函数定义为:

sigmoid函数的梯度可以按照下式进行计算:

为了验证是否正确,当z=0时,梯度的精确值为0.25,当z很大时(可为负值),梯度值接近为0。

网络参数的随机初始化

训练神经网络时,使用随机数初始化网络参数非常重要。一个非常有效的书籍初始化策略为,在范围$[ -\epsilon_{init}, \epsilon_{init} ]$内按照均匀分布随机选择参数$\Theta^{(l)}$的初始值。这里设置$\epsilon_{init} = 0.12$,这样能保证参数较小且训练过程高效。

对于一般的神经网络,如果第$l$层的输入单元数为$L_{in}$,输出单元数为$L_{out}$,则$\epsilon_{init} = {\sqrt{6}}/{\sqrt{L_{in} + L_{out}}}$可以做为有效的指导策略。

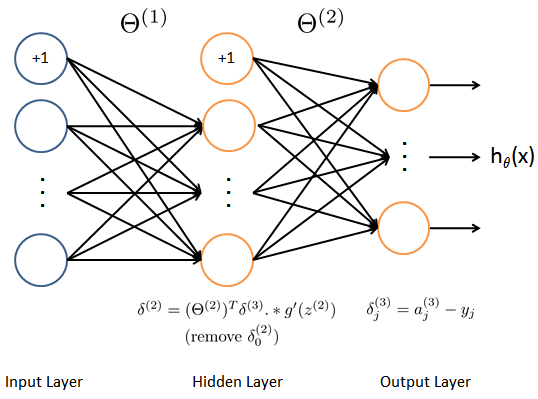

BP算法

这里将实现误差反传训练算法(Backpropagation)。误差反传算法的思想大致可以描述如下:

对于一个训练样本$(x^{(t)}, y^{(t)})$,我们首先使用前向传播计算网络中所有单元(神经元)的激活值,包括假设输出$h_{\Theta}(x)$。那么,对于第$l$层的第j个节点,我们期望计算出一个误差项$\delta_{j}^{(l)}$用于衡量该节点对于输出的误差的贡献。

对于输出节点,我们可以直接计算网络的激活值与真实目标值之间的误差。对于我们所训练的第3层为输出层的网络,这个误差定义为$\delta_{j}^{(3)}$。对于隐层单元,需要根据第$l+1$层的节点的误差的加权平均来计算$\delta_{j}^{(l)}$。

-

设输入层的值 $a^{(1)}$ 为第 $t$ 个训练样本 $x^{(t)}$ 。执行前向传播,计算第2层与第3层各节点的激活值( $z^{(2)}, a^{(2)}, z^{(3)}, a^{(3)}$ )。注意你需要在 $a^{(1)}$ 与 $a^{(2)}$ 增加一个全部为 +1 的向量,以确保包括了偏置项。在

numpy中可以使用函数ones,hstack,vstack等完成(向量化版本)。 - 对第3层中的每个输出单元 $k$ ,计算

\[\delta_{k}^{(3)} = a_{k}^{(3)} - y_k\] 其中 $y_k \in {0, 1}$ 表示当前训练样本是否是第 $k$ 类。

- 对隐层 $l=2$ , 计算

\[\delta^{(2)} = \left( \Theta^{(2)} \right)^T \delta^{(3)} .* g^{\prime} (z^{(2)})\] 其中 $g^{\prime}$ 表示 Sigmoid 函数的梯度,

.*在numpy中是通 常的逐个元素相乘的乘法,矩阵乘法应当使用numpy.dot函数。 - 使用下式将当前样本梯度进行累加:

\[\Delta^{(l)} = \Delta^{(l)} + \delta^{(l+1)}(a^{(l)})^T\] 在

numpy中,数组可以使用+=运算。 - 计算神经网络代价函数的(未正则化的)梯度,

\[\frac{\partial}{\partial \Theta_{ij}^{(l)}} J(\Theta) = D_{ij}^{(l)} = \frac{1}{m} \Delta_{ij}^{(l)}\]

检测梯度

在神经网络中,需要最小化代价函数 $J(\Theta)$ 。为了检查梯度计算是否正确,考虑把参数 $\Theta^{(1)}$ 和 $\Theta^{(2)}$ 展开为一个长的向量 $\theta$ 。假设函数 $f_i(\theta)$ 表示 $\frac{\partial}{\partial \theta_i} J(\theta)$ 。

令

上式中, $\theta^{(i+)}$ 除了第 $i$ 个元素增加了 $\epsilon$ 之 外,其他元素均与 $\theta$ 相同。类似的, $\theta^{(i-)}$ 中仅第 $i$ 个元素减少了 $\epsilon$ 。可以使用数值近似验证 $f_i(\theta)$ 计算是否正确:

如果设 $\epsilon=10^{-4}$ ,通常上式左右两端的差异出现于第4位有效数字之后(经常会有更高的精度)。

这里创建了一个较小的神经网络用于检测你的误差反传训练算法所计算得到的梯度是否正确。如果你的实现是正确的,你得到的 梯度与数值梯度之后的绝对误差(各分量的绝对值差之和)应当小于 $10^{-9}$ 。

神经网络的正则化

假设你在误差反传算法中计算了 $\Delta_{ij}^{(l)}$ ,你需要增加的正则化项为

注意你不应该正则化 $\Theta^{(l)}$ 的第一列,因其对应于偏置项。

使用fmin_cg学习网络参数

在训练完成后,程序会汇报在训练集上的正确率。如果你的实现正确,得到的正确率应该在 95.4% 左右(由于随机初始化的原因可能有 1% 变化)。

你可以调整正则化参数 $\lambda$ (lmb) 以及优化算法的最大迭代次数(如设 maxiter = 400 ),来观察各参数对训练过程和结果的影响。

可视化隐层

对于我们学得的神经网络,一种可视化其隐层所学得的“表示”的方式是将除偏置单元外的 400 维向量转换为 20x20 的图像并显示出来。

程序代码

# -*- coding: utf-8 -*-

from __future__ import print_function

import numpy as np

import scipy.io as sio

from scipy.optimize import fmin_cg

import matplotlib.pyplot as plt

def display_data(data, img_width=20):

"""将图像数据 data 按照矩阵形式显示出来"""

plt.figure()

# 计算数据尺寸相关数据

n_rows, n_cols = data.shape

img_height = n_cols // img_width

# 计算显示行数与列数

disp_rows = int(np.sqrt(n_rows))

disp_cols = (n_rows + disp_rows - 1) // disp_rows

# 图像行与列之间的间隔

pad = 1

disp_array = np.ones((pad + disp_rows*(img_height + pad),

pad + disp_cols*(img_width + pad)))

idx = 0

for row in range(disp_rows):

for col in range(disp_cols):

if idx > m:

break

# 复制图像块

rb = pad + row*(img_height + pad)

cb = pad + col*(img_width + pad)

disp_array[rb:rb+img_height, cb:cb+img_width] = data[idx].reshape((img_height, -1), order='F')

# 获得图像块的最大值,对每个训练样本分别归一化

max_val = np.abs(data[idx].max())

disp_array[rb:rb+img_height, cb:cb+img_width] /= max_val

idx += 1

plt.imshow(disp_array)

plt.gray()

plt.axis('off')

plt.savefig('data-array.png', dpi=150)

plt.show()

def nn_cost_function(nn_params, *args):

"""Cost function of neural network.

:param nn_params: parameters (weights) of the neural netwrok.

It is a 1D array including weights of all layers.

:returns: J, the cost of the neural network.

:rtype: float

"""

# Unpack parameters from *args

input_layer_size, hidden_layer_size, num_labels, lmb, X, y = args

# Unroll weights of neural networks from nn_params

Theta1 = nn_params[0:hidden_layer_size*(input_layer_size + 1)]

Theta1 = Theta1.reshape((hidden_layer_size, input_layer_size + 1))#25*401

Theta2 = nn_params[hidden_layer_size*(input_layer_size + 1):]

Theta2 = Theta2.reshape((num_labels, hidden_layer_size + 1))#10*26

# Setup some useful variables

m = X.shape[0]

# You need to return the following variable correctly

J = 0.0

# ====================== YOUR CODE HERE ======================

y1 = np.zeros((m,num_labels))

for i in range(m):

y1[i,y[i]-1] = 1#one-hot型

#X.shape[0]=5000,shape[1]=400

#y.shape[0]=5000, shape[1]=1

X = np.hstack((np.ones((m, 1)), X))

Z = sigmoid(np.dot(X, np.transpose(Theta1)))

Z = np.hstack((np.ones((m, 1)), Z))

h = sigmoid(np.dot(Z,np.transpose(Theta2)))

# Regularization Term

J = np.sum(-y1*np.log(h)-(1-y1)*np.log(1-h))/m + lmb/(2*m)*(np.sum(Theta1[:,1:]**2)+np.sum(Theta2[:,1:]**2))

# ================== END OF YOUR CODE HERE ===================

return J

def nn_grad_function(nn_params, *args):

"""Gradient of the cost function of neural network.

:param nn_params: parameters (weights) of the neural netwrok.

It is a 1D array including weights of all layers.

:returns: grad, the gradient of the cost of the neural network.

:rtype: float

"""

# Unpack parameters from *args

input_layer_size, hidden_layer_size, num_labels, lmb, X, y = args

# Unroll weights of neural networks from nn_params

Theta1 = nn_params[:hidden_layer_size*(input_layer_size + 1)]

Theta1 = Theta1.reshape((hidden_layer_size, input_layer_size + 1))#25*401

Theta2 = nn_params[hidden_layer_size*(input_layer_size + 1):]

Theta2 = Theta2.reshape((num_labels, hidden_layer_size + 1))#10*26

# Setup some useful variables

m = X.shape[0]

# ====================== YOUR CODE HERE ======================

y1 = np.zeros((m,num_labels))

for i in range(m):

y1[i,y[i]-1] = 1

a1 = np.hstack((np.ones((m, 1)), X))#5000x401

z2 = np.dot(a1,np.transpose(Theta1))#5000x25

a2 = sigmoid(z2)

a2 = np.hstack((np.ones((m,1)), a2)) #5000x26

z3 = np.dot(a2, np.transpose(Theta2)) #5000x10

a3 = sigmoid(z3) #5000x10 第三层激活值

Delta_l2 = np.zeros((num_labels, hidden_layer_size + 1))

Delta_l1 = np.zeros((hidden_layer_size,input_layer_size + 1))

delta_l3 = a3 - y1 #5000x10

gz2 = np.hstack((np.zeros((m,1)),sigmoid_gradient(z2)))

delta_l2 = np.dot(delta_l3,Theta2)*gz2 #5000x26

delta_l2 = delta_l2[:,1:]

Delta_l2 = Delta_l2 + np.dot(np.transpose(delta_l3),a2) #10x26

Delta_l1 = Delta_l1 + np.dot(np.transpose(delta_l2),a1) #25x401

theta1 = np.hstack((np.zeros((hidden_layer_size,1)) , Theta1[:,1:]))

theta2 = np.hstack((np.zeros((num_labels,1)) , Theta2[:,1:]))

Theta2_grad = Delta_l2/m + lmb/m*theta2

Theta1_grad = Delta_l1/m + lmb/m*theta1

# ================== END OF YOUR CODE HERE ===================

# Unroll gradients

grad = np.hstack((Theta1_grad.flatten(), Theta2_grad.flatten()))

return grad

def sigmoid(z):

"""Sigmoid function"""

return 1.0/(1.0 + np.exp(-np.asarray(z)))

def sigmoid_gradient(z):

"""Gradient of sigmoid function."""

g = np.zeros_like(z)

# ====================== YOUR CODE HERE ======================

g = sigmoid(z)*(1-sigmoid(z))

# ================== END OF YOUR CODE HERE ===================

return g

def rand_initialize_weights(L_in, L_out):

"""Randomly initialize the weights of a layer with L_in incoming

connections and L_out outgoing connections"""

# You need to return the following variables correctly

W = np.zeros((L_out, 1 + L_in))

# ====================== YOUR CODE HERE ======================

epsilon_init = (6.0/(L_out+L_in))**0.5

W = np.random.rand(L_out,1+L_in)*2*epsilon_init-epsilon_init

# np.random.rand(L_out,1+L_in)产生L_out*(1+L_in)大小的随机矩阵

# ================== END OF YOUR CODE HERE ===================

return W

def debug_initialize_weights(fan_out, fan_in):

"""Initalize the weights of a layer with

fan_in incoming connections and

fan_out outgoing connection using a fixed strategy."""

W = np.linspace(1, fan_out*(fan_in+1), fan_out*(fan_in+1))

W = 0.1*np.sin(W).reshape(fan_out, fan_in + 1)

return W

def compute_numerical_gradient(cost_func, theta):

"""Compute the numerical gradient of the given cost_func

at parameter theta"""

numgrad = np.zeros_like(theta)

perturb = np.zeros_like(theta)

eps = 1.0e-4

for idx in range(len(theta)):

perturb[idx] = eps

loss1 = cost_func(theta - perturb)

loss2 = cost_func(theta + perturb)

numgrad[idx] = (loss2 - loss1)/(2*eps)

perturb[idx] = 0.0

return numgrad

def check_nn_gradients(lmb=0.0):

"""Creates a small neural network to check the backgropagation

gradients."""

input_layer_size, hidden_layer_size = 3, 5

num_labels, m = 3, 5

Theta1 = debug_initialize_weights(hidden_layer_size, input_layer_size)

Theta2 = debug_initialize_weights(num_labels, hidden_layer_size)

X = debug_initialize_weights(m, input_layer_size - 1)

y = np.array([1 + (t % num_labels) for t in range(m)])

nn_params = np.hstack((Theta1.flatten(), Theta2.flatten()))

cost_func = lambda x: nn_cost_function(x,

input_layer_size,

hidden_layer_size,

num_labels, lmb, X, y)

grad = nn_grad_function(nn_params,

input_layer_size, hidden_layer_size,

num_labels, lmb, X, y)

numgrad = compute_numerical_gradient(cost_func, nn_params)

print(np.vstack((numgrad, grad)).T, np.sum(np.abs(numgrad - grad)))

print('The above two columns you get should be very similar.')

print('(Left-Your Numerical Gradient, Right-Analytical Gradient)')

def predict(Theta1, Theta2, X):

"""Make prediction."""

# Useful values

m = X.shape[0]

# num_labels = Theta2.shape[0]

# You need to return the following variables correctly

p = np.zeros((m,1), dtype=int)

# ====================== YOUR CODE HERE ======================

'''正向传播,预测结果'''

X = np.hstack((np.ones((m,1)),X))

h1 = sigmoid(np.dot(X,np.transpose(Theta1)))

h1 = np.hstack((np.ones((m,1)),h1))

h2 = sigmoid(np.dot(h1,np.transpose(Theta2)))

'''

返回h中每一行最大值所在的列号

- np.max(h, axis=1)返回h中每一行的最大值(是某个数字的最大概率)

- 最后where找到的最大概率所在的列号(列号即是对应的数字)

'''

'''

p = np.array(np.where(h2[0,:] == np.max(h2, axis=1)[0]))

for i in np.arange(1, m):

t = np.array(np.where(h2[i,:] == np.max(h2, axis=1)[i]))

p = np.vstack((p,t))

'''

# ================== END OF YOUR CODE HERE ===================

# print(h1.shape, h2.shape)

p = np.argmax(h2, axis=1) + 1.0

return p

# Parameters

input_layer_size = 400 # 20x20 大小的输入图像,图像内容为手写数字

hidden_layer_size = 25 # 25 hidden units

num_labels = 10 # 10 类标号 从1到10

# =========== 第一部分 ===============

# 加载训练数据

print("Loading and Visualizing Data...")

data = sio.loadmat('PRML_NN_data.mat')

X, y = data['X'], data['y']

m = X.shape[0]

# 随机选取100个数据显示

rand_indices = list(range(m))

np.random.shuffle(rand_indices)

X_sel = X[rand_indices[:100]]

display_data(X_sel)

# =========== 第二部分 ===============

print('Loading Saved Neural Network Parameters ...')

# Load the weights into variables Theta1 and Theta2

data = sio.loadmat('PRML_NN_weights.mat')

Theta1, Theta2 = data['Theta1'], data['Theta2']

# print Theta1.shape, (hidden_layer_size, input_layer_size + 1)

# print Theta2.shape, (num_labels, hidden_layer_size + 1)

# ================ Part 3: Compute Cost (Feedforward) ================

# To the neural network, you should first start by implementing the

# feedforward part of the neural network that returns the cost only. You

# should complete the code in nnCostFunction.m to return cost. After

# implementing the feedforward to compute the cost, you can verify that

# your implementation is correct by verifying that you get the same cost

# as us for the fixed debugging parameters.

#

# We suggest implementing the feedforward cost *without* regularization

# first so that it will be easier for you to debug. Later, in part 4, you

# will get to implement the regularized cost.

print('Feedforward Using Neural Network ...')

# Weight regularization parameter (we set this to 0 here).

lmb = 0.0

nn_params = np.hstack((Theta1.flatten(), Theta2.flatten()))

J = nn_cost_function(nn_params,

input_layer_size, hidden_layer_size,

num_labels, lmb, X, y)

print('Cost at parameters (loaded from PRML_NN_weights): %f ' % J)

print('(this value should be about 0.287629)')

# =============== Part 4: Implement Regularization ===============

print('Checking Cost Function (w/ Regularization) ... ')

lmb = 1.0

J = nn_cost_function(nn_params,

input_layer_size, hidden_layer_size,

num_labels, lmb, X, y)

print('Cost at parameters (loaded from PRML_NN_weights): %f ' % J)

print('(this value should be about 0.383770)')

# ================ Part 5: Sigmoid Gradient ================

print('Evaluating sigmoid gradient...')

g = sigmoid_gradient([1, -0.5, 0, 0.5, 1])

print('Sigmoid gradient evaluated at [1 -0.5 0 0.5 1]: ', g)

# ================ Part 6: Initializing Pameters ================

print('Initializing Neural Network Parameters ...')

initial_Theta1 = rand_initialize_weights(input_layer_size, hidden_layer_size)

initial_Theta2 = rand_initialize_weights(hidden_layer_size, num_labels)

# Unroll parameters

initial_nn_params = np.hstack((initial_Theta1.flatten(),

initial_Theta2.flatten()))

# =============== Part 7: Implement Backpropagation ===============

print('Checking Backpropagation... ')

# Check gradients by running checkNNGradients

check_nn_gradients()

# =============== Part 8: Implement Regularization ===============

print('Checking Backpropagation (w/ Regularization) ... ')

# Check gradients by running checkNNGradients

lmb = 3.0

check_nn_gradients(lmb)

# =================== Part 8: Training NN ===================

print('Training Neural Network...')

lmb, maxiter = 1.0, 500

args = (input_layer_size, hidden_layer_size, num_labels, lmb, X, y)

nn_params, cost_min, _, _, _ = fmin_cg(nn_cost_function,

initial_nn_params,

fprime=nn_grad_function,

args=args,

maxiter=maxiter,

full_output=True)

Theta1 = nn_params[:hidden_layer_size*(input_layer_size + 1)]

Theta1 = Theta1.reshape((hidden_layer_size, input_layer_size + 1))

Theta2 = nn_params[hidden_layer_size*(input_layer_size + 1):]

Theta2 = Theta2.reshape((num_labels, hidden_layer_size + 1))

# ================= Part 9: Visualize Weights =================

print('Visualizing Neural Network... ')

display_data(Theta1[:, 1:])

# ================= Part 10: Implement Predict =================

pred = predict(Theta1, Theta2, X)

# print(pred.shape, y.shape)

# print(np.hstack((pred, y)))

print('Training Set Accuracy:', np.mean(pred == y[:, 0])*100.0)

#

# PRML_Neural_Networks.py ends here